This week started off with a bang: I successfully built a program in Python that scraped Newbery Honors winners from a website and dumped them into a .csv file that I could, in turn, load into Google Sheets for the team to investigate.

As has been the case with much of my work in DH so far, the breakthrough was made possible by a combination of online tutorials, trial and error, and logic. While I’d consulted tutorials last week, most of them walked me through sample scrapes specific to the site being scraped. Monday, though, I found a good introductory tutorial that focused on the big picture of web scraping as it worked through its sample, rather than merely handing me code. The tutorial stressed how essential it is to study the architecture of the HTML you want to scrape first, so that you can articulate the sequence of patterns in the code that will isolate the data you need.
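Before writing any extraction code, a quick survey of which tags and classes repeat can make that architecture visible. The sketch below uses Python’s built-in `html.parser` rather than a third-party parser so it runs without extra installs; `TagSurvey`, `survey`, and `SAMPLE` are hypothetical names, and the markup is a simplified stand-in for the real page, not a copy of it.

```python
from collections import Counter
from html.parser import HTMLParser


class TagSurvey(HTMLParser):
    """Tally every (tag, class) pair so repeated patterns stand out."""

    def __init__(self):
        super().__init__()
        self.counts = Counter()

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs; keep only the class
        css_class = dict(attrs).get("class")
        self.counts[(tag, css_class)] += 1


def survey(html):
    parser = TagSurvey()
    parser.feed(html)
    parser.close()
    return parser.counts


# Hypothetical, simplified markup; the real page is longer and messier
SAMPLE = """
<table>
  <tr><td>Introductory text</td></tr>
  <tr><td class="order">1922</td>
      <td><a href="#">The Great Quest</a> by <a href="#">Charles Hawes</a></td></tr>
  <tr><td class="order">1972</td>
      <td><a href="#">Annie and the Old One</a> by <a href="#">Miska Miles</a></td></tr>
</table>
"""

counts = survey(SAMPLE)
for (tag, css_class), n in counts.most_common():
    print(f"{tag:<6} class={css_class!s:<6} x{n}")
```

A tally like this shows at a glance that dates carry a class while titles and authors do not, which is exactly the kind of pattern worth articulating before scraping.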

In the case of the site I found, the HTML was fairly basic and ambiguous. Instead of using helpful designations, such as consistent and unique spans and classes, to indicate titles, authors, and dates, the code organized all of the information on the page (introduction, visuals, and Honor book data alike) in rows of a table. So, I first had to use trial and error to isolate the rows that contained the book data, which turned out to be the range `[-323:-2]`. (Yes, I realize that my range could have been positive, but I thought it easiest to work backward, since the book data extended nearly to the bottom of the page.) Fortunately, the dates of the Honors were tucked into separate table cells with a class designation of `<td class="order">`, so those were easy enough to scrape. But each book’s title and author shared a single table cell, each with its own anchor tag, which made for tricky disambiguation.
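Here is a minimal sketch of that row isolation, again with the standard library’s `html.parser`. It assumes a hypothetical page with one intro row, two book rows, and two trailing non-book rows, so the slice `[-4:-2]` plays the role that `[-323:-2]` played on the real site. `RowCollector` is an illustrative name, not code from the post.

```python
from html.parser import HTMLParser


class RowCollector(HTMLParser):
    """Collect each <tr> as a list of (class, text) pairs, one pair per <td>."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # [class, text fragments] while inside a <td>

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag == "td" and self._row is not None:
            self._cell = [dict(attrs).get("class"), []]

    def handle_data(self, data):
        if self._cell is not None:
            self._cell[1].append(data)

    def handle_endtag(self, tag):
        if tag == "td" and self._cell is not None:
            css_class, parts = self._cell
            # Join the fragments (anchor text included) and collapse whitespace
            self._row.append((css_class, " ".join("".join(parts).split())))
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None


# Hypothetical page: one intro row, two book rows, two trailing non-book rows
SAMPLE = """
<table>
  <tr><td>Introductory text</td></tr>
  <tr><td class="order">1922</td>
      <td><a href="#">The Great Quest</a> by <a href="#">Charles Hawes</a></td></tr>
  <tr><td class="order">1972</td>
      <td><a href="#">Annie and the Old One</a> by <a href="#">Miska Miles</a></td></tr>
  <tr><td>Footer links</td></tr>
  <tr><td>Copyright notice</td></tr>
</table>
"""

parser = RowCollector()
parser.feed(SAMPLE)
parser.close()

# Count back from the end, skipping the last two rows
book_rows = parser.rows[-4:-2]

# Dates live in the cells whose class is "order"
years = [text for row in book_rows for css_class, text in row if css_class == "order"]
# The remaining cell in each book row holds the combined title and author
title_cells = [text for row in book_rows for css_class, text in row if css_class != "order"]
print(years)
print(title_cells)
```

Because the anchor text is joined back together with the plain text between the anchors, each title cell comes out as a single “Title by Author” string, which sets up the splitting step described next.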

Fortunately, I’d seen Patrick Smyth parse text by a specific word in a workshop last year (maybe it was bylines in newspaper articles, though I don’t really recall). So, employing a bit of logic, I extracted the text of the `<td>` cells without the class of “order,” split that text at the “ by ” juncture, and assigned the text before it (`[0]`) to the title and the text after it (`[-1]`) to the author. Worked like a charm. The rest of the code, which was easy to adapt from the tutorial, turned all of that into a dataframe (a new term for me!) suitable for export to a .csv file.
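The split-and-export step might look roughly like the sketch below. The post built a dataframe; to stay dependency-free, this version writes CSV with Python’s `csv` module instead (with pandas, the equivalent last step would be along the lines of `pd.DataFrame(records, columns=["year", "title", "author"]).to_csv("honors.csv", index=False)`). The function names are hypothetical.

```python
import csv
import io


def split_title_author(cell_text):
    """Split 'Title by Author' at the ' by ' juncture."""
    parts = cell_text.split(" by ")
    # As in the post: the first piece is the title, the last piece is the author
    return parts[0].strip(), parts[-1].strip()


def rows_to_csv(pairs):
    """Turn (year, 'Title by Author') pairs into CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["year", "title", "author"])
    for year, cell_text in pairs:
        title, author = split_title_author(cell_text)
        writer.writerow([year, title, author])
    return buffer.getvalue()


pairs = [
    ("1922", "The Great Quest by Charles Hawes"),
    ("1972", "Annie and the Old One by Miska Miles"),
]
print(rows_to_csv(pairs))
```

One thing to watch with `[0]` and `[-1]`: a cell with no “ by ” in it comes back as a one-element list, so title and author are identical, and a quick `title == author` check can flag those rows for hand review. When writing to disk, `open("honors.csv", "w", newline="", encoding="utf-8")` keeps accented names intact.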

I’m particularly proud of the program for two reasons. First, it is only 17 lines of actual code, far more concise than any other Python program I’ve written. Second, I employed a trick I learned in Patrick’s software lab last spring: writing comments as a way to solidify my own understanding of my code. So, I feel ready to keep scraping sites as our crew looks at other award winners. (This practice has had an extra benefit, too: our team is interested in learning from one another, and Emily happily mentioned in Tuesday’s class that the comments helped her understand the code as well.)

After generating the .csv file, I cleaned the data as I had done with Georgette’s set last week. Again, I saw and documented a slew of potential issues that might prove tricky as we bring this data into Tableau, such as accent marks in author names and even an obviously erroneous attribution of an author as “See and Read” rather than Miska Miles. With both data sets on the Medal winners and Honors winners cleaned, I popped them into Google Sheets for us all to explore and expand. The rest of the week was spent adding to the data set beyond what scraping can do, as Emily and I began to investigate the identities of the books’ protagonists as well as the identities of the Honor authors.
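That cleaning was done by inspection, but two of the issues named here lend themselves to quick automated checks. The sketch below assumes a hand-maintained `CORRECTIONS` table (hypothetical, seeded with the “See and Read” error) and uses Python’s standard `unicodedata` module to flag and fold accented names. The folded form is meant only as a matching aid; the original spelling stays in the data.

```python
import unicodedata

# Hypothetical hand-curated fixes, keyed on the bad value as it appears after scraping
CORRECTIONS = {"See and Read": "Miska Miles"}


def clean_author(name):
    """Collapse stray whitespace, then apply any known correction."""
    name = " ".join(name.split())
    return CORRECTIONS.get(name, name)


def has_non_ascii(name):
    """True if the name contains accents or other non-ASCII characters."""
    return any(ord(ch) > 127 for ch in name)


def ascii_fold(name):
    """Drop combining accent marks (e.g. 'Peña' -> 'Pena') for matching only."""
    decomposed = unicodedata.normalize("NFKD", name)
    return "".join(ch for ch in decomposed if not unicodedata.combining(ch))


for raw in ["See and Read", "  Miska   Miles ", "Peña"]:
    name = clean_author(raw)
    flag = "check accents" if has_non_ascii(name) else ""
    print(f"{raw!r:>20} -> {name!r:<15} {ascii_fold(name)!r:<15} {flag}")
```

Keeping corrections in one table, rather than editing cells one by one, also documents each fix, which fits the habit of recording issues before the data goes into Tableau.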

As has been my experience with all data gathering, the realities of the data revealed limitations in our spreadsheet. For example, we didn’t have a uniform approach to protagonists who weren’t human (some were animals, some animals’ nationalities actually mattered in the story, one protagonist was a steam engine, and one story revolved around a family of dolls). Nor did we have a way to indicate multiple protagonists, as is the case for collections of stories or novels with sibling pairs or families in main roles.
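One possible way around the multiple-protagonist problem, sketched here only as an option for the team to weigh, is a “long” layout: one spreadsheet row per protagonist instead of one row per book, with a `kind` field for non-human protagonists. Every field name, the `kind` vocabulary, and the placeholder books below are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Protagonist:
    label: str
    kind: str = "human"       # hypothetical vocabulary: "human", "animal", "machine", "doll", ...
    identity_notes: str = ""  # free text until the team settles on terms


@dataclass
class Book:
    year: int
    title: str
    author: str
    protagonists: list[Protagonist] = field(default_factory=list)


HEADER = ["year", "title", "author", "protagonist_no", "label", "kind", "identity_notes"]


def to_long_rows(books):
    """Flatten books into one spreadsheet row per (book, protagonist) pair."""
    rows = []
    for book in books:
        for number, p in enumerate(book.protagonists, start=1):
            rows.append([book.year, book.title, book.author,
                         number, p.label, p.kind, p.identity_notes])
    return rows


# Placeholder books, not real data
books = [
    Book(1900, "Hypothetical Sibling Novel", "Author A",
         [Protagonist("Older sister"), Protagonist("Younger brother")]),
    Book(1901, "Hypothetical Engine Story", "Author B",
         [Protagonist("The engine", kind="machine")]),
]

for row in [HEADER] + to_long_rows(books):
    print(row)
```

With this shape, a story collection can list as many protagonists as it needs without adding columns, and the book details simply repeat on each row. Note that a book with an empty protagonist list produces no rows at all, so a placeholder entry may be safer while identities are still being researched.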

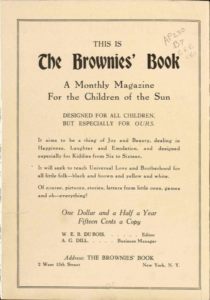

As our team discussed in both of our Skype sessions so far, the most important task this upcoming week is to resolve how to deduce, ascribe, and name the identity markers we have already begun to record. As my own students have been studying the Harlem Renaissance this winter, I am reminded that the inaugural year of the Newbery Awards came on the heels of the two-year experiment of the Brownies’ Book, a magazine created by W.E.B. Du Bois and Jessie Fauset to give black American kids a way to see themselves in print, countering both the white faces normalized in the children’s literature of the time and the stereotypes of nonwhite children that abounded. (In fact, I found a 1919 letter in which Du Bois himself responds to an inquiry about the use of racial terms in the Brownies’ Book, writing, “So long as the masses of educated people are agreed upon the significance of a word, it is impossible for you or me to ignore it, simply because we do not like it.”) So, Georgette will reach out to a host of experts, in addition to the help she’s already found from the founder of the Diverse Book Finder, and Meg and I will scour the online literature in hopes of additional clarity as we move forward.

Having learned that the Brownies’ Book is due for rerelease this year, in honor of the 100th anniversary of its initial publication, I find our work very timely.

Inside cover of the 1920s edition of The Brownies’ Book. From https://www.loc.gov/item/22001351/